Vitis AI Library Examples

This section is part of a series on using the DPUCZDX8G Deep Learning Processor Unit (DPU), a programmable engine optimized for convolutional neural networks (CNNs), within the Vitis AI environment. Here, I demonstrate how to use the Vitis AI neural network libraries with models based on the TensorFlow framework for practical AI applications. The examples are ResNet50 for image classification and YOLOv3 for object detection, both taken from the Vitis AI Model Zoo. All tests are conducted on the Trenz TE0820-03-2AI21FA (ZU+ 2CG) module with the TE0703-06 carrier board and a connected Logitech C270 USB camera.

This series covers the key steps for enabling efficient AI acceleration on embedded platforms. By following this guide, you can gain practical insights into designing, deploying, and running AI workloads on Zynq UltraScale+ MPSoCs using Vitis AI.

All sources are freely available in my repository. The repository contains examples for two ZU+ devices (2CG and 4EV, Trenz modules TE0820-03-2AI21FA and TE0820-05-4DE21MA) and lets you create the Vivado hardware design and deploy Linux with PetaLinux for the Vitis AI environment. The structure of the repository:

board/ - Vivado block design and project configuration tcl

petalinux/ - Petalinux project configuration

ip/ - DPU IP core sources

scripts/ - some helper scripts

My host environment:

2. Vitis 2022.2 installed on Ubuntu 20.04

3. Petalinux 2022.2

How to start. Once Linux has booted on the target, X11 forwarding over SSH can be used to run graphical applications on the target.

[host]:~/edu-vitis-ai$ ssh -X root@192.168.1.123
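Graphical samples only open a window when X11 forwarding is actually in effect. The following is a minimal sketch of a check that could be run in the SSH session. It assumes that `ssh -X` sets the `DISPLAY` variable on the remote side, which is the usual OpenSSH behaviour; the function name `x11_forwarding_active` is a hypothetical helper, not part of any Vitis AI tool.

```shell
# Succeed when the current shell has a non-empty DISPLAY, which is what
# "ssh -X" normally arranges on the remote side.
x11_forwarding_active() {
    [ -n "${DISPLAY:-}" ]
}

if x11_forwarding_active; then
    echo "X11 forwarding looks active: DISPLAY=$DISPLAY"
else
    echo "DISPLAY is not set; graphical samples will not open a window" >&2
fi
```

If the check fails, it may help to verify that X11 forwarding is enabled in the SSH server configuration on the target and that an X server is running on the host.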

First, let's check that the DPU IP core is initialized by using the xdputil tool:

[target]:~# xdputil query

The output provides device information, including the DPU configuration, its fingerprint, and the Vitis AI version.
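Since the fingerprint matters for the later steps, it can be handy to pull it out of the query output. The sketch below assumes that the output is JSON-like text in which the value appears as `"fingerprint":"0x..."`; the helper name `dpu_fingerprints` is hypothetical.

```shell
# Print every fingerprint value found in the text read from stdin.
# Assumption: the value is stored as "fingerprint":"0x<hex digits>".
dpu_fingerprints() {
    grep -o '"fingerprint" *: *"0x[0-9a-fA-F]*"' |
        sed 's/.*"\(0x[0-9a-fA-F]*\)"$/\1/'
}

# Possible use on the target:
#   xdputil query | dpu_fingerprints
```

The function only filters text, so it can also be applied to a saved copy of the query output on the host.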

Running the pre-trained ResNet50 example. The rootfs already contains a pre-trained and compiled version of the ResNet50 CNN for image classification. To run the example, the compiled and optimized model file (xmodel) must first be copied into the application directory. The example can then be executed with the model and one of the images in the img/ folder.

[target]:~# cd app

[target]:~/app# cp model/resnet50.xmodel .

[target]:~/app# samples/bin/resnet50 img/bellpeppe-994958.JPEG

An expected failure appears: a warning about a wrong fingerprint for the model. The model was compiled for the DPU B4096 architecture, but the 2CG device uses the B1152 architecture.
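A fingerprint mismatch of this kind can be caught before compiling a model by comparing the value reported by the target with the arch.json file generated with the hardware design. The following is a rough sketch: it assumes arch.json stores the fingerprint as a quoted string, and `arch_has_fingerprint` is a hypothetical helper, not a Vitis AI command.

```shell
# Succeed when ARCH_FILE mentions FINGERPRINT (case-insensitive match).
# Assumption: arch.json stores the value as a quoted string, "0x...".
# Returns 2 when the file does not exist.
arch_has_fingerprint() {
    arch_file=$1
    fingerprint=$2
    [ -f "$arch_file" ] || { echo "no such file: $arch_file" >&2; return 2; }
    grep -qiF "\"$fingerprint\"" "$arch_file"
}

# Possible use on the host, with the value reported by the target:
#   arch_has_fingerprint arch.json 0x... && echo "arch.json matches the DPU"
```

A model compiled against an arch.json that passes this check should not trigger the fingerprint warning on the same DPU configuration.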

Re-compiling the ResNet50 xmodel for the B1152 architecture. Re-compiling an existing xmodel requires Vitis AI 3.0 and Docker for WSL2, and is done with the prebuilt Vitis AI Docker container vitis-ai-tensorflow-cpu. The arch.json file, which contains information about the integrated DPU, should also be copied into Vitis-AI/. This file will be used for model re-compilation.

[host]:~$ git clone -b 3.0 https://github.com/Xilinx/Vitis-AI.git

[host]:~$ cd Vitis-AI/

[host]:~/Vitis-AI$ cp ~/edu-vitis-ai/build/te0820-2cg-xsa/top.gen/sources_1/bd/sys/ip/sys_dpuczdx8g_0_0/arch.json .

[host]:~/Vitis-AI$ docker pull xilinx/vitis-ai-tensorflow-cpu:latest

[host]:~/Vitis-AI$ ./docker_run.sh xilinx/vitis-ai-tensorflow-cpu:latest

The output screen should look like the picture below.

Re-compiling ResNet50 requires the quantized ResNet50 model. The download link for the quantized model can be found in the model.yaml file located in Vitis-AI/model_zoo/model-list/ under the directory for the corresponding framework and pre-trained CNN. For ResNet50 image classification, tf_resnetv1_50_imagenet_224_224_6.97G_3.0/model.yaml is used.
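The download links can also be listed directly from model.yaml instead of reading the file by hand. The sketch below assumes that each entry in the file has a `board:` line followed by a `download link:` line; the helper name `list_model_links` is hypothetical.

```shell
# Print "board<TAB>link" for every entry of a model.yaml read from stdin.
# Assumption: each entry has a "board:" line before its "download link:".
list_model_links() {
    awk '
        /^[ \t]*board:/ {
            sub(/^[ \t]*board:[ \t]*/, "")
            board = $0
        }
        /^[ \t]*download link:/ {
            sub(/^[ \t]*download link:[ \t]*/, "")
            print board "\t" $0
        }
    '
}

# Possible use inside the Vitis-AI checkout:
#   list_model_links < model_zoo/model-list/tf_resnetv1_50_imagenet_224_224_6.97G_3.0/model.yaml
```

The entry whose board column reads GPU is expected to be the float and quantized package needed for re-compilation, while the other entries are xmodels precompiled for specific boards.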

[docker]:workplace$ wget "https://www.xilinx.com/bin/public/openDownload?filename=tf_resnetv1_50_imagenet_224_224_6.97G_3.0.zip" -O tf_resnetv1_50.zip

[docker]:workplace$ unzip tf_resnetv1_50.zip

[docker]:workplace$ cd tf_resnetv1_50_imagenet_224_224_6.97G_3.0/

The quantized model file is quantized_baseline_6.96B_919.pb. It is used to re-compile the ResNet50 xmodel for the current DPU configuration, together with the arch.json file copied earlier. The re-compiled model is stored in the out/ directory and should be copied to the target.

[docker]:workplace/tf_resnetv1_50_imagenet_224_224_6.97G_3.0$ vai_c_tensorflow --arch ./../arch.json -f quantized/quantized_baseline_6.96B_919.pb --output_dir out -n resnet50

[docker]:workplace/tf_resnetv1_50_imagenet_224_224_6.97G_3.0$ scp out/resnet50.xmodel root@192.168.1.123:~/app
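The compile step above can be wrapped in a small function that checks its inputs first, which tends to give clearer errors than a failed compiler run. This is only a sketch: `compile_xmodel` and the `VAI_C` variable are hypothetical names, and the flags are the ones already used in the command above.

```shell
# Compiler command; overridable so the wrapper can be dry-run with VAI_C=echo.
VAI_C=${VAI_C:-vai_c_tensorflow}

# compile_xmodel ARCH_JSON FROZEN_PB NET_NAME [EXTRA_COMPILER_ARGS...]
# Checks that both input files exist, then calls the compiler with the
# output directory fixed to "out".
compile_xmodel() {
    arch=$1
    pb=$2
    name=$3
    shift 3
    [ -f "$arch" ] || { echo "missing arch file: $arch" >&2; return 1; }
    [ -f "$pb" ] || { echo "missing quantized model: $pb" >&2; return 1; }
    "$VAI_C" --arch "$arch" -f "$pb" --output_dir out -n "$name" "$@"
}

# Possible use inside the container:
#   compile_xmodel ../arch.json quantized/quantized_baseline_6.96B_919.pb resnet50
```

Any extra arguments are passed through unchanged, so compiler options for other models can be appended after the network name.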

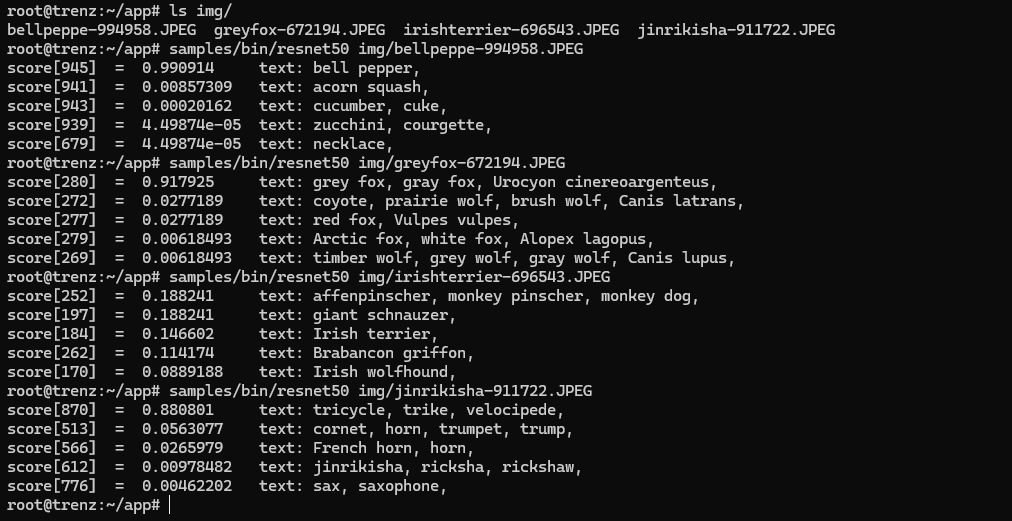

After running the application one more time with the updated resnet50.xmodel, the output for different images should be

Object detection with YOLOv3 and a USB camera. The YOLOv3 object detector is implemented with the TensorFlow framework. The files to download are listed in Vitis-AI/model_zoo/model-list/tf_yolov3_voc_416_416_65.63G_3.0/model.yaml. Both the quantized model and the xmodel package for zcu102 & zcu104 & kv260 should be downloaded. The vitis_ai_library_r3.0.x_images.tar.gz package with images for the examples is also required.

[host]:~/Vitis-AI$ wget "https://www.xilinx.com/bin/public/openDownload?filename=tf_yolov3_voc_416_416_65.63G_3.0.zip" -O yolov3.zip

[host]:~/Vitis-AI$ wget "https://www.xilinx.com/bin/public/openDownload?filename=yolov3_voc_tf-zcu102_zcu104_kv260-r3.0.0.tar.gz" -O yolov3.tar.gz

[host]:~/Vitis-AI$ wget "https://www.xilinx.com/bin/public/openDownload?filename=vitis_ai_library_r3.0.0_images.tar.gz" -O vitis_ai_library_images.tar.gz

[host]:~/Vitis-AI$ ./docker_run.sh xilinx/vitis-ai-tensorflow-cpu:latest

All three archives, yolov3.zip, yolov3.tar.gz and vitis_ai_library_images.tar.gz, are unpacked inside the Docker container. Unpacking vitis_ai_library_images.tar.gz creates the folder samples/yolov3 with images for testing the YOLOv3 detector. The content of that folder should be copied into the examples/ folder next to the sources of the YOLOv3 detector, and an archive for the target is then created.

[docker]:workplace$ unzip yolov3.zip

[docker]:workplace$ tar -xzvf yolov3.tar.gz

[docker]:workplace$ tar -xzvf vitis_ai_library_images.tar.gz

[docker]:workplace$ cp -r samples/yolov3/* examples/vai_library/samples/yolov3/

[docker]:workplace$ tar -czvf yolov3_target.tar.gz -C examples/vai_library/samples/yolov3/ .
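Before copying the archive to the target, it can be worth confirming that it contains the build script the later steps rely on. The following sketch lists the archive and looks for a top-level entry; `archive_has_file` is a hypothetical helper name.

```shell
# Succeed when ARCHIVE (a .tar.gz) contains NAME at its top level,
# stored either as "NAME" or as "./NAME".
archive_has_file() {
    archive=$1
    name=$2
    tar -tzf "$archive" | grep -qFx -e "$name" -e "./$name"
}

# Possible use inside the container:
#   archive_has_file yolov3_target.tar.gz build.sh || echo "build.sh is missing" >&2
```

Both spellings are checked because an archive created with `-C <dir> .` stores its members with a leading `./`.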

Re-compiling the YOLOv3 detector for the B1152 DPU architecture. Re-compiling the quantized TensorFlow model follows the same process as for ResNet50, except that the input shape is passed explicitly through --options. The model out/yolov3_voc_tf.xmodel will be created. Afterwards, the archive yolov3_target.tar.gz, the re-compiled model and the prototxt config file are copied to the target.

[docker]:workplace$ cd tf_yolov3_voc_416_416_65.63G_3.0/

[docker]:workplace/tf_yolov3_voc_416_416_65.63G_3.0$ vai_c_tensorflow --arch ./../arch.json -f quantized/quantize_eval_model.pb --output_dir out -n yolov3_voc_tf --options "{'input_shape':'1,416,416,3'}"

[docker]:workplace/tf_yolov3_voc_416_416_65.63G_3.0$ scp out/yolov3_voc_tf.xmodel ../yolov3_voc_tf/yolov3_voc_tf.prototxt root@192.168.1.123:~

[docker]:workplace/tf_yolov3_voc_416_416_65.63G_3.0$ scp ../yolov3_target.tar.gz root@192.168.1.123:~

Running the YOLOv3 detector examples. On the target, the sources of the YOLOv3 detector example should be unpacked from the archive and compiled (compiling takes some time). The YOLOv3 detector examples can then be executed.

[target]:~# tar -xzvf yolov3_target.tar.gz -C ~/

[target]:~# chmod +x build.sh

[target]:~# ./build.sh

[target]:~# ./test_jpeg_yolov3 yolov3_voc_tf.xmodel sample_yolov3.jpg

For the Logitech C270 USB camera (0 is the camera device index and -t 2 sets the number of threads):

[target]:~# ./test_video_yolov3 yolov3_voc_tf.xmodel 0 -t 2
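If the video example does not start, a first thing to rule out is a missing camera node. The sketch below assumes that camera index 0 corresponds to /dev/video0 on the target, which is the usual V4L2 mapping but is not confirmed by the sources of this example; `is_video_node` is a hypothetical helper.

```shell
# Succeed when the given path is a character device, as V4L2 camera nodes are.
is_video_node() {
    [ -c "$1" ]
}

# Assumption: camera index 0 corresponds to /dev/video0 on the target.
camera=/dev/video0
if is_video_node "$camera"; then
    echo "camera node found: $camera"
else
    echo "camera node missing: $camera (is the USB camera plugged in?)" >&2
fi
```

If the camera is enumerated under a different node, the index passed to the example would need to change accordingly.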

Conclusion. This section demonstrated the execution of two key examples from the Vitis AI Model Zoo: ResNet50 for image classification and YOLOv3 for object detection. Both models were recompiled to support the DPUCZDX8G IP core with the B1152 architecture, instead of the default B4096, and were deployed on the Trenz TE0820-03-2AI21FA module with the TE0703-06 carrier board. This guide provides a step-by-step approach to running Vitis AI models on a custom hardware setup, and the principles and procedures outlined can be applied to other Zynq UltraScale+ (ZU+) devices. By following this process, users can adapt different models from the Vitis AI Model Zoo, or custom-trained models, to their specific hardware configurations for efficient AI acceleration.

Sources:

1. Vitis AI Library User Guide (UG1354)

2. DPUCZDX8G for Zynq UltraScale+ MPSoCs Product Guide (PG338)